Projects

NOTE: This page is extremely out of date. See a list of projects completed during my PhD under Publications.

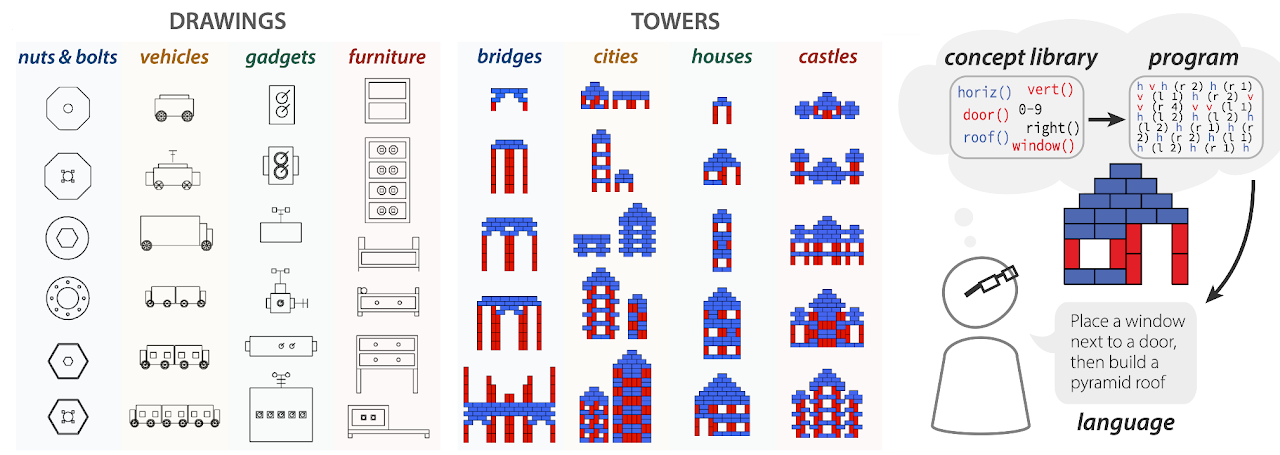

Identifying concept libraries from language about object structure

Our understanding of the visual world goes beyond naming objects, encompassing our ability to parse objects into meaningful parts, attributes, and relations. In this work, we leverage natural language descriptions for a diverse set of 2K procedurally generated objects to identify the parts people use and the principles leading these parts to be favored over others... (read full abstract below)

Paper | Project Website

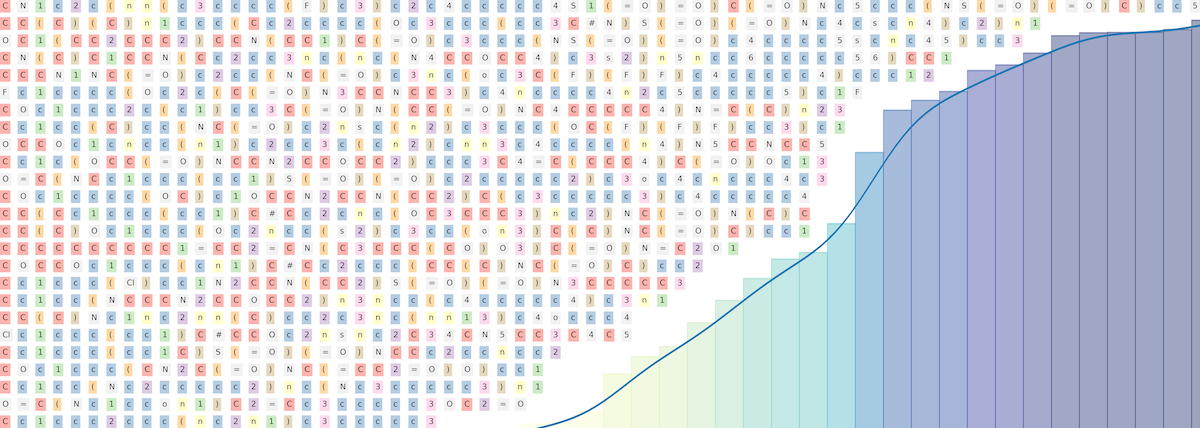

Training Transformers for Practical Drug Discovery with Tensor2Tensor

Despite the recent successes of Transformers in NLP, adoption of this architecture within cheminformatics has so far been limited to sequence-to-sequence tasks like reaction prediction, just one class of tasks in the rapidly growing problem space of molecular machine learning. This blog post for Reverie Labs (and accompanying Colab notebook) walks through a complete pipeline for training Transformer networks on a wide range of drug discovery tasks using Tensor2Tensor.

Blog Post | Colab Notebook

Designing a User-Friendly ML Platform with Django

Building useful real-world machine learning systems requires more than just optimizing metrics. In an applied setting like computational chemistry, how can we make the latest ML research user-friendly and accessible for non-expert users? This blog post for Reverie Labs (and accompanying talk) outlines our Django-based production ML platform, which allows users to get predictions from state-of-the-art models with the ease of ordering online takeout.

Blog Post | Talk Recording

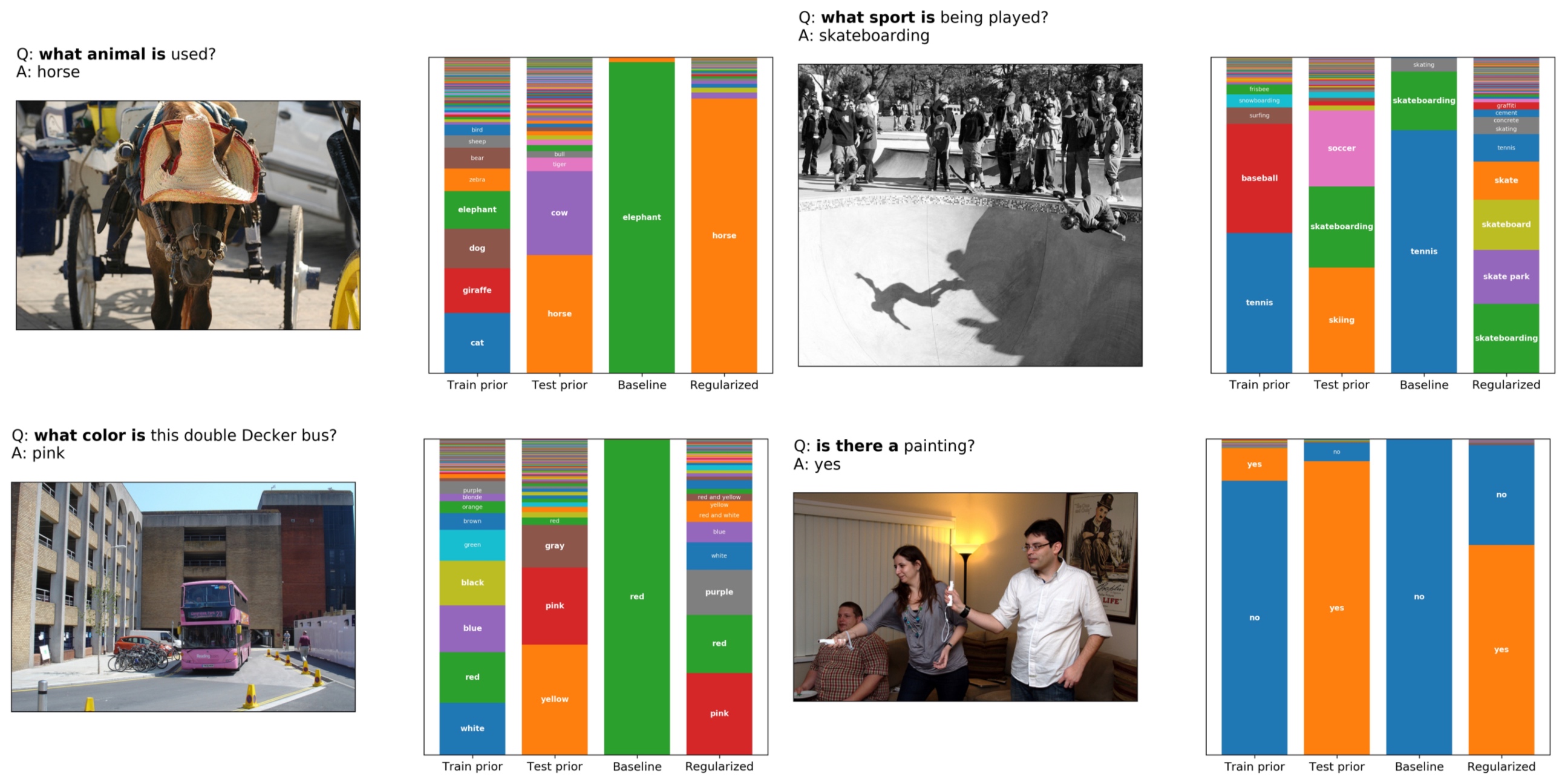

Adversarial Regularization for Visual Question Answering

Visual question answering (VQA) models have been shown to over-rely on linguistic biases in VQA datasets, answering questions "blindly" without considering visual context. Adversarial regularization (AdvReg) aims to address this issue via an adversary sub-network that encourages the main model to learn a bias-free representation of the question. In this work, we investigate the strengths and shortcomings of AdvReg with the goal of better understanding how it affects inference in VQA models.

Paper | Code

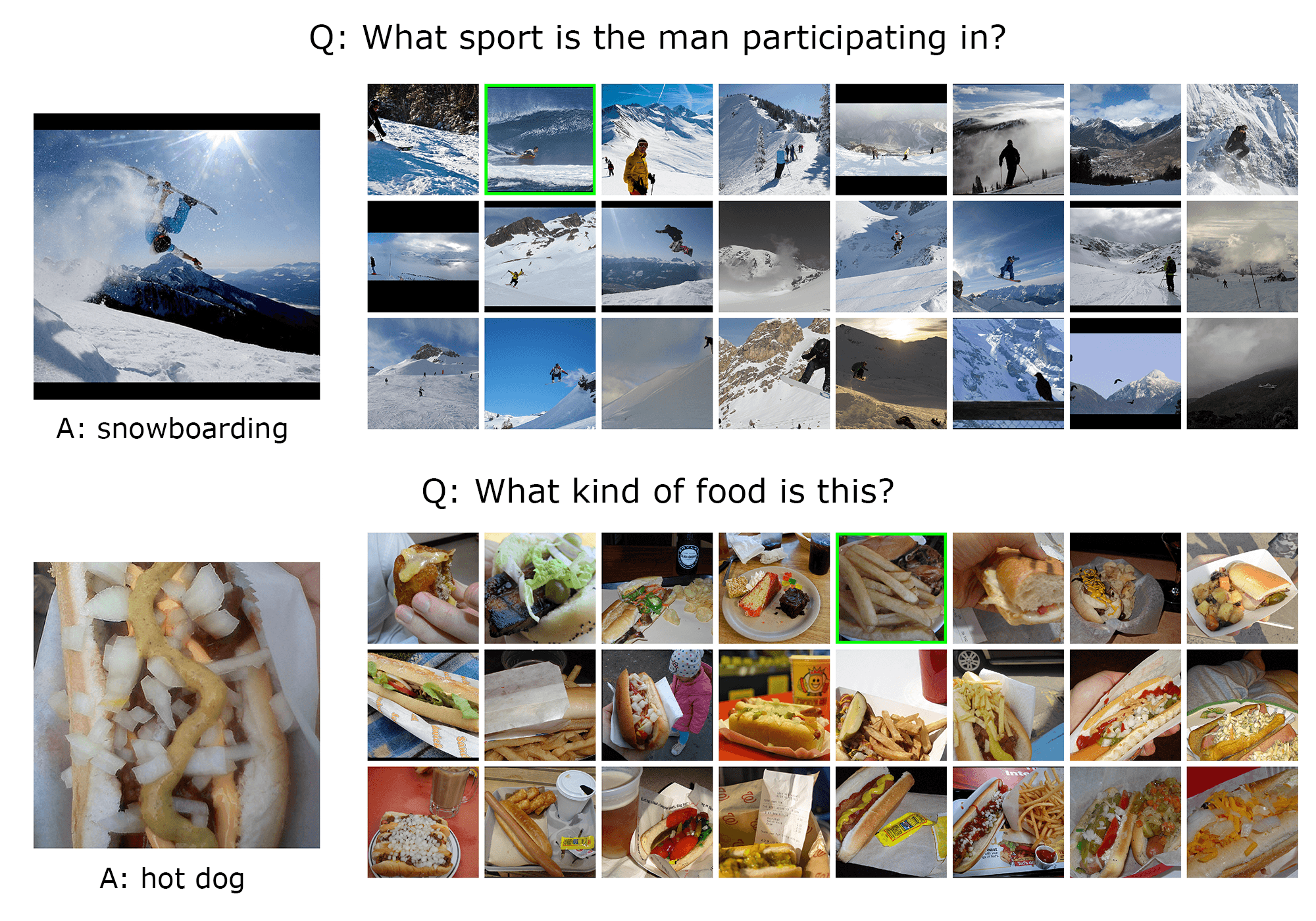

On the Flip Side

We explore a reformulation of the VQA task that challenges models to identify counterexamples: images that result in a different answer to the original question. We find that the multimodal representations learned by an existing state-of-the-art VQA model do not meaningfully contribute to performance on this task. These results call into question the assumption that successful performance on the VQA benchmark is indicative of general visual-semantic reasoning abilities.

Paper | Code

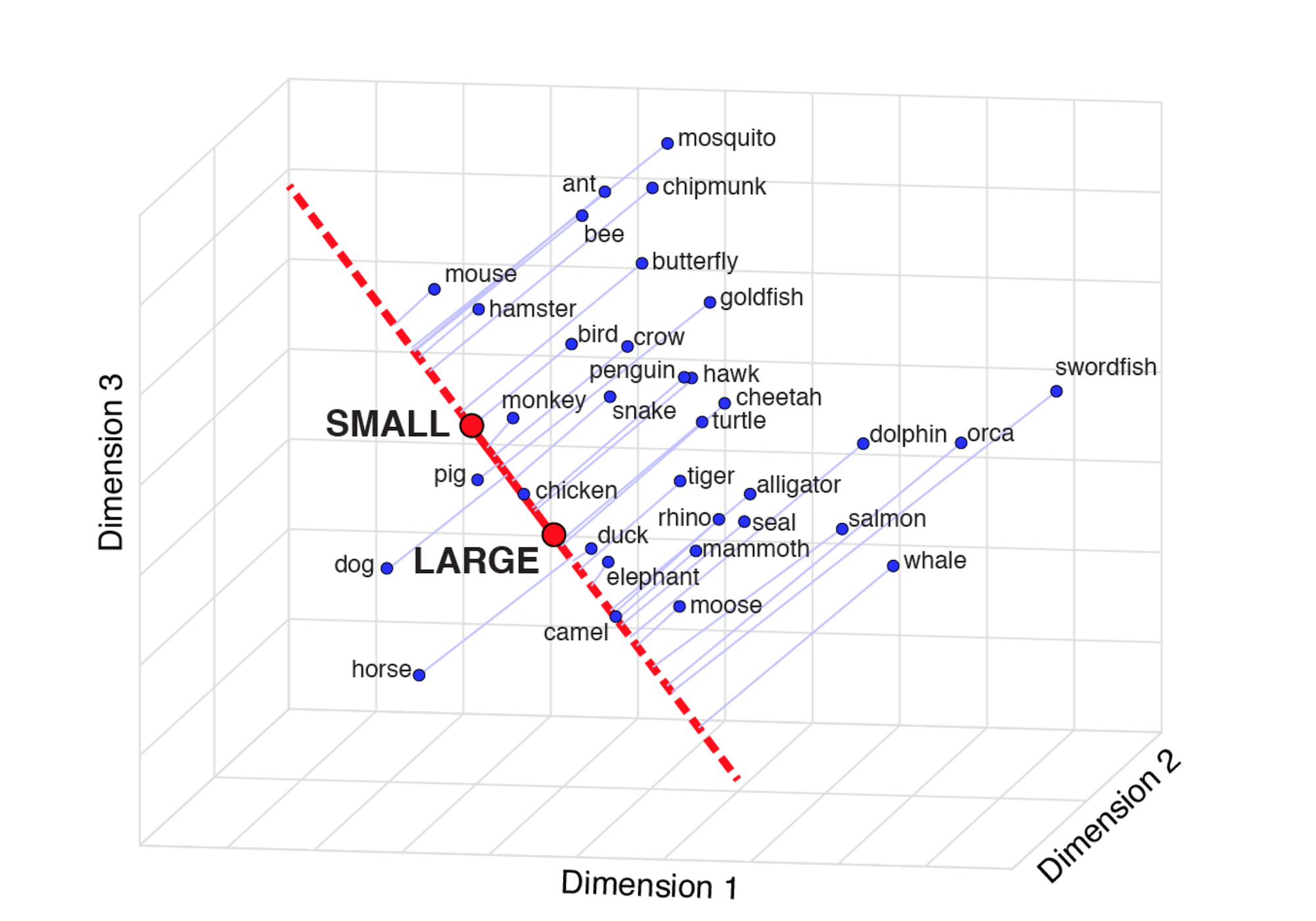

Semantic Projection

We introduce a powerful, domain-general method for recovering human knowledge from word embeddings: "semantic projection" of word-vectors onto lines that represent various object features. Our results from a large-scale Mechanical Turk study show that this method recovers human judgments across a range of object categories and properties.
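The idea of projecting word-vectors onto a feature line can be illustrated with a minimal sketch. It assumes the line for a feature such as size is defined by the difference between the vectors of two pole words ("large" minus "small"); the three-dimensional embeddings, the helper names `feature_direction` and `semantic_projection`, and the word list are all hypothetical and chosen only for illustration, not taken from the paper's code.

```python
import numpy as np

def feature_direction(pos_vecs, neg_vecs):
    """Unit vector pointing from the negative pole to the positive pole.

    Assumption: the feature line is the difference between the mean
    vectors of the two sets of pole words.
    """
    pos = np.mean(np.asarray(pos_vecs, dtype=float), axis=0)
    neg = np.mean(np.asarray(neg_vecs, dtype=float), axis=0)
    line = pos - neg
    return line / np.linalg.norm(line)

def semantic_projection(word_vec, direction):
    """Scalar position of a word-vector along the feature line."""
    return float(np.dot(word_vec, direction))

# Toy embeddings (hypothetical values, not real word vectors).
toy_embeddings = {
    "small": np.array([-1.0, 0.0, 0.2]),
    "large": np.array([1.0, 0.0, 0.2]),
    "mouse": np.array([-0.8, 0.5, 0.1]),
    "horse": np.array([0.6, 0.4, 0.3]),
    "whale": np.array([0.9, -0.3, 0.2]),
}

# Build a "size" line from the two pole words.
size_axis = feature_direction(
    [toy_embeddings["large"]], [toy_embeddings["small"]]
)

# Order the animals by their projection onto the size line.
animals = ["mouse", "horse", "whale"]
ranked = sorted(
    animals, key=lambda w: semantic_projection(toy_embeddings[w], size_axis)
)
print(ranked)
```

With real embeddings, the resulting ordering along each feature line would be the quantity to compare against human ratings of the same objects.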

Paper | Code

Last updated on June 30, 2022